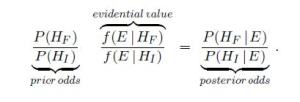

I saw some tweets last night alluding to a technique for Bayesian forensics, the basis for which published papers are to be retracted: So far as I can tell, your paper is guilty of being fraudulent so long as the/a prior Bayesian belief in its fraudulence is higher than in its innocence. Klaassen (2015):

“An important principle in criminal court cases is ‘in dubio pro reo’, which means that in case of doubt the accused is favored. In science one might argue that the leading principle should be ‘in dubio pro scientia’, which should mean that in case of doubt a publication should be withdrawn. Within the framework of this paper this would imply that if the posterior odds in favor of hypothesis HF of fabrication equal at least 1, then the conclusion should be that HF is true.” Now the definition of “evidential value” (supposedly, the likelihood ratio of fraud to innocent), called V, must be at least 1. So it follows that any paper for which the prior for fraudulence exceeds that of innocence, “should be rejected and disqualified scientifically. Keeping this in mind one wonders what a reasonable choice of the prior odds would be.”(Klaassen 2015)

Now the definition of “evidential value” (supposedly, the likelihood ratio of fraud to innocent), called V, must be at least 1. So it follows that any paper for which the prior for fraudulence exceeds that of innocence, “should be rejected and disqualified scientifically. Keeping this in mind one wonders what a reasonable choice of the prior odds would be.”(Klaassen 2015)

Yes, one really does wonder!

“V ≥ 1. Consequently, within this framework there does not exist exculpatory evidence. This is reasonable since bad science cannot be compensated by very good science. It should be very good anyway.”

What? I thought the point of the computation was to determine if there is evidence for bad science. So unless it is a good measure of evidence for bad science, this remark makes no sense. Yet even the best case can be regarded as bad science simply because the prior odds in favor of fraud exceed 1. And there’s no guarantee this prior odds ratio is a reflection of the evidence, especially since if it had to be evidence-based, there would be no reason for it at all. (They admit the computation cannot distinguish between QRPs and fraud, by the way.) Since this post is not yet in shape for my regular blog, but I wanted to write down something, it’s here in my “rejected posts” site for now.

Added June 9: I realize this is being applied to the problematic case of Jens Forster, but the method should stand or fall on its own. I thought rather strong grounds for concluding manipulation were already given in the Forster case. (See Forster on my regular blog). Since that analysis could (presumably) distinguish fraud from QRPs, it was more informative than the best this method can do. Thus, the question arises as to why this additional and much shakier method is introduced. (By the way, Forster admitted to QRPs, as normally defined.) Perhaps it’s in order to call for a retraction of other papers that did not admit of the earlier, Fisherian criticisms. It may be little more than formally dressing up the suspicion we’d have in any papers by an author who has retracted one(?) in a similar area. The danger is that it will live a life of its own as a tool to be used more generally. Further, just because someone can treat a statistic “frequentistly” doesn’t place the analysis within any sanctioned frequentist or error statistical home. Including the priors, and even the non-exhaustive, (apparently) data-dependent hypotheses, takes it out of frequentist hypotheses testing. Additionally, this is being used as a decision making tool to “announce untrustworthiness” or “call for retractions”, not merely analyze warranted evidence.

Klaassen, C. A. J. (2015). Evidential value in ANOVA-regression results in scientific integrity studies. arXiv:1405.4540v2 [stat.ME]. Discussion of the Klaassen method on pubpeer review: https://pubpeer.com/publications/5439C6BFF5744F6F47A2E0E9456703

Pingback: “Fraudulent until proved innocent: Is this really the new “Bayesian Forensics”? (rejected post) | Error Statistics Philosophy

‘V ≥ 1. Consequently, within this framework there does not exist exculpatory evidence.’

I suspect a mathematical error: Bayesian updating is a martingale.

Corey: Please explain, and/or update if you find the suspected math error.

So here we have two mutually exclusive and exhaustive hypotheses.The martingale property means that the predictive expectation of the posterior probability of an hypothesis is equal to the prior probability; this implies that if it’s possible for one of the hypotheses to receive a B-boost, then it is also possible for the other hypothesis to receive a B-boost. (Proving this is a few lines of algebra.)

Corey: Thanks, but I don’t think these are exhaustive–as cashed out in the analysis. (I admit that my hunch is based on a quick perusal, as I’ve no time now. Even if you think “fraud” and “innocence” are exhaustive (which I would question), the formally translated claims need not be. Hoping to learn from others who are immersed in the case.)

Doesn’t matter. However the hypothesis space is partitioned, one can take the disjunction of hypotheses that count as exculpation and the disjunction of hypotheses that count as condemnation, and then the martingale argument applies.

By the way, all of this is related to Jon Williamson’s argument against diachronic Dutch books; his whole line about evidence that we can know in advance will B-boost a specific hypothesis falls afoul of this same martingale property, rendering his argument an exercise in question-begging.

I don’t see what you mean. he gives the standard argument Bayesian philosophers give, as in the post on my blog. Let E be the assertion: P’(S) = .1.

P’ is prob at time t, P is prob now.

So at time t, P(E) > 0

But P(S|E) = 1, since P(S) = 1.

Now, Bayesian updating says

If P(E) > 0, then P’(. ) = P(. |E).

But at t’ we have P’(S) = .1,

which contradicts P’(S) = P(S| P’(S) = .1) = 1, by updating.

http://errorstatistics.com/2012/04/15/3376/

Mayo: So two things. First, that’s not the argument of Jon Williamson’s that I was thinking of; he has an argument against diachronic Dutch books which leans on the idea of some evidence to come in the future that is known in the present to B-boost one specific hypothesis. Second, it’s true that Bayesian probability is not a theory that describes an agent with imperfect memory, but that was never the aim; so who cares? Suffice it to say, I have very little respect for the acumen of the Bayesian philosophers you often discuss.

Corey: I thought this was an ex like that, and of course it’s been around for ages. If you get a chance, can you explain it more? Of course the examples are intended to show violations with Bayesian updating.

True, the Bayesian philosophers should be more involved in actual statistics, but some of the issues/arguments still apply to stat practice.

I gave a more extended analysis of Williamson’s argument in the comments here.

So I’ve read the paper, and what I don’t see there is a prior over the nuisance parameters (the correlations) and marginalization of said nuisance parameters. Instead I see an optimization of the likelihood ratio with respect to the nuisance parameters. So this isn’t (what I would call) a Bayesian analysis; it’s a likelihood ratio test of nested hypotheses without any error control — the ratio itself is treated as an evidential summary. (Actually the optimal ratio is only bounded, not computed explicitly, but that doesn’t really touch on the philosophical underpinnings of the approach.)

Thanks, my intuitive concerns stand. So are the priors basically informal, subjective assessments of strength of belief that author is a fraud vs innocent?

There are no priors in the paper. The prior odds are mentioned because the author justifies the relevance of the likelihood ratio in terms of the odds form of Bayes’s theorem, but the focus is on the likelihood ratio.

The Bayesian talk around V is irrelevant / misleading / wrong. The author proposes a frequentist test-statistic and use it in a frequentist way, with a significance level of alpha = 0.08. This in order to evaluate one “study”. He then goes on to combine many such tests in a conventional way (comparing number of significant outcomes to the binomial distribution with p = alpha). At this stage he uses a significance level of alpha = 0.02. This in order to evaluate ine “paper”.

The Bayesian talk is a smoke-screen. Some of it is nonsense.

That makes it sound like p-curves to discern p-hacking;. I may be running the general idea together with how its used in the Forster case.

But wait, is it correct that the likelihood for finding “suspicious” Vs increases with the number of Vs per study then? I think the authors of the response on the report wrote that in one paper the likelihood of finding 2 “suspicious” Vs out of computed 17 Vs by chance is 40%. Is this correct?

It is also stated here: https://twitter.com/richarddmorey/status/608422081693409281

It seems that with good old frequentist hypothesis testing came good old p-hacking. The authors of the UvA report use their own criteria very liberally. Here are a few examples of what they do when a study falls short of showing enough “incriminating” evidence:

1. Take in Vs lower than 6 as “substantive” (on p. 57, they refer to a V of 5.05 as “substantive”)

2. Take the higher-bound value of V instead of the lower-bound (on p. 82, a V between 3.84 and 12.38 is considered substantive).

3. Find a “suspicious” element post-hoc. For example, the paper K.JF.D10 has only one V above 6, but is listed in the “consider retraction” category because there is a SD=0 in one of the conditions and the authors felt it is “peculiar” (p. 78). (By the way, there is nothing “peculiar” about this SD=0 . In this study, participants classified metaphors and literal sentence as “metaphors” and “non-metaphors”. In the condition in questions, each of the 15 participants simply correctly classified five out of five non-metaphors as “non-metaphors”.)

4. If reported means do not provide enough high V values, try pooling together different conditions (e.g., p. 58).

Thanks for your response. That’s not a legitimate frequentist method; I wish people would stop calling mangled methods frequentist. The users clearly call this Bayesian, and for a reason: the prior beliefs are there to warrant the mangling.

Mayo, the users call it Bayesian but it isn’t Bayesian. V is just a frequentist test-statistic. The apparently Bayesian motivation behind it is incorrect. But the users use it in a frequentist way, anyway, so it doesn’t matter.

Why do you say this? Perhaps they call it Bayesian because it’s a questionable frequentist test from the perspective of controlling error probabilities or being able to say the inferences reached are evidentially warranted. The selection effects they complain about might also thereby disappear. I realize you’re much more familiar with the case, but I suspect this is a method intended to be applied when strong statistical evidence is lacking, but prior beliefs warrant suspicion. Why else invite the inevitable criticism?

We should soon see “statistical lawyers” whom you can hire to defend yourself against charges. You think?

(1) It is not a questionable frequentist test. They can and do control error probabilities. (You may disagree with their choice of significance level). In fact there are two levels here: first of all a test statistic based on a single “study”, V. Then a test statistic for a whole paper: here, they look at the number of studies for which V exceeds some threshold. Effectively they are using a significance level of 8% at the first stage and 2% at the second stage. (One might of course criticise those choices. As they say: “one should use those criteria wisely”).

(2) The supremum of the likelihood over a composite hypothesis divided by the likelihood of a simple hypothesis is not a likelihood ratio, in the Bayesian sense. It is (of course) the standard procedure for constructing “generalised likelihood ratio tests” in frequentist statistics. The discussion about “evidence” and “hypotheses” is confused. There is no prior.

I’m afraid that they invite inevitable criticism because their Bayesian argumentation, which is used only to motivate the definition of V, is (IMHO) muddled, wrong. But it is superfluous! Easily replaceable by an ordinary and sensible frequentist motivation.

Their actual statistical analyses (frequentist) are careful, thorough, sensible. Completely independent of the Bayesian window-dressing given at the beginning. Supported by extensive data-analysis.

PS there is further discussion on the technical aspects here: https://pubpeer.com/publications/5439C6BFF5744F6F47A2E0E9456703

Henk Kroost: the chance of finding 2 or more “substantial” V’s out of 17, assuming independence and that the chance of “substantial” at each of the 17 trials is 0.0809, is indeed 40%:

1 – pbinom(1, 17, 0.0809)

[1] 0.4050597

It should be noted that “V” is rather conservative. “0.0809” is an upper bound. So 40% is also an upper bound. One should do simulation experiments with parameters matching the actual situation to find out if this “accusation” is valid.

By the way, if some SDs are zero and others are not, because in fact the data itself is highly discrete and in some situation all respondents give the same answer, then the use of standard ANOVA machinery, F-tests and so on, is highly discutable. It means that the scientific conclusions of the original paper in question are not reliable.

Here is another example from social psychology:

https://pubpeer.com/publications/D9E7062DA9161CDA79C1D3E64C2DE9

A “hot chili sauce” experiment.

The result to which the SD=0 belongs is not the main focus of the study. The focus is reaction times, not response rate. When the focus is RT, researchers often set the study to have very high accuracy rates. So the conclusions of the paper do not depend on that result.

BTW: Wouldn’t you say that an SD = 0 of the sort we are talking about also means that you cannot meaningfully compute V?

An SD = 0 is impossible under the statistical assumptions needed in order to justify the usual ANOVA F tests. It seems to me that if the authors of these papers in social psychology think that formal significance at the 5% level is strong enough evidence to publish the research findings as supporting the accompanying scientific theory, then formal significance at the 5% level of a test based on V is enough to discredit that scientific claim.

You can’t have it both ways.

By the way, in Liberman and Denzler’s response to KPW, they explain that the actual design of their experiments is often not the same as the “nominal” design in the SPPS generated ANOVA in their paper. For instance: they don’t randomise subjects to treatment groups: they separate them into homogenous groups (e.g. according to sex) and then randomised each group separately. So actually they have a randomised block design with a couple more factors beyond the factors they told SPSS about; and actually the statistical model behind their own ANOVA is incorrect, by their own design choices.

Maybe this kind of “over-design” is what is generating the “too good to be true” statistics.

Dear Richard,

I do not feel that i know enough to discuss how social psychologists in general use statistics. It is probably true that some of us make mistakes in stats. I wanted to discuss the specific instance of the DV for which a SD=0 emerged in one of the conditions and a high V was calculated in the report.

The authors of the original paper were wrong to analyze it with an ANOVA. They were probably ignorant in stats. This, however, is not consequential for their paper, because the DV in question was not the main DV of the study.

The authors of the report were wrong to analyze this DV with the V test. Would you agree on that? They wre probably NOT ignorant in stats. This IS consequential, because they counted this as two “pieces of evidence”: a high V (which they should not have calculated in the first place) and a SD of 0 which they found peculiar (but in fact is fairly normal). This and only this placed this paper in the “consider retraction” category.

(I am Nira Liberman, not sure why my previous reply was posted as “anonymous”)

Nira: Your first comment did come through with your name. This one shows “someone” but I’ve never seen wordpress supply that name or any name on its own. Please try again. I take it you are not alluding to a paper in which you are co-author. Thank you very much for commenting, i hope people can get to the bottom of this.

Dear Nira,

You may well be right. Which paper exactly are you referring to? I did not study everything in detail (and to be honest, I don’t want to).

Everybody makes mistakes, including PKW. I think that the UvA should not have adopted the conclusions of PKW “just like that”.

Richard

Perhaps it is worth mentioning why the authors associate their approach with forensic statistics. Current orthodoxy has it, that the judge or jury have the prior, the statistician must only inform the court of the likelihood ratio. However, the hypotheses of prosecution and defence are typically both composite hypotheses. Hence the statistician will have to do two separate Bayesian analyses, picking prior distributions over all unknown parameters in both of the two hypotheses.

Replacing Bayesian averaging over the prosecution’s parameter space by a maximum is wrong. If the statistician is reluctant to specify a prior, the only fair thing to do is to take a minimum.

Klaassen argues that though in a criminal court you are innocent till proven guilty, in a scientific court you are guilty till proven innocent. But when a powerful organisation “requests” publishers to retract published papers written by third persons, we are not in the realm of science: we are effectively in the realm of criminal law.

I’m obviously completely unaware of “current orthodoxy” in forensics statistics! What? the statistician merely informs on the likelihood ratio and the judge or jury supply the prior? What happened to the error probabilities? These LRs don’t even exhaust. I concur that this becomes a policy/legal question, which is why I said maybe we’ll start seeing stat lawyers. I would recommend hiring one in frequentist error statistics. Maybe in this connection, it’s time to reread Laudan’s post on the presumption of innocence not being a Bayesian prior.

http://errorstatistics.com/2013/07/20/guest-post-larry-laudan-why-presuming-innocence-is-not-a-bayesian-prior/

The orthodox view:

http://www.wiley.com/WileyCDA/WileyTitle/productCd-0470843675.html

Statistics and the Evaluation of Evidence for Forensic Scientists, 2nd Edition

C. G. G. Aitken, Franco Taroni

ISBN: 978-0-470-84367-3

540 pages

August 2004

There is a vociferous minority which argues that the statistician has to go full Bayes. Norman Fenton is an eloquent exponent of this point of view.

The orthodox viewpoint has become official policy of many scientific and legal organisations.

By the way, the just mentioned book does appear to follow a likelihood ratio / Bayesian approach but again and again uses “plug-in” frequentist estimates to avoid the problem of composite hypotheses.

… but plugging-in a frequentist estimate into a Bayesian likelihood is a hybrid approach which at best might be considered some rough and ready approximation, at worst can cause unacceptable bias. If the prosecution is allowed to pick parameter values after seeing the evidence in order to maximise the likelihood of their hypothesis, they are cheating … But this is essentially what Klaassen is doing, if you take his Bayesianism seriously. But the Bayesian motivation for looking at V is a red herring, if we simply accept that V is a sensible frequentist test statistic, and investigate its frequentist properties directly. Which is what Klaassen and PKW also do, anyway.

Richard: The more I here you on this, the more confused I am about the method. That may be because it’s coming in in pieces. If you’d be willing to put together your remarks they could form a really interesting guest post (on either of my blogs, but almost no one comes to the rejected posts unless I send them.)

Mayo, have you tried actually reading Klaassen (2014)? See the text between formulas (13) and (14). H_F is a composite hypothesis. So in f(E | H_F) you have to average over the unknown parameters in H_F.

I didn’t check the references Zhang (2009) or Bickel (2011) yet, but I don’t trust them. The authors work in classical frequentist mathematical statistics, they prove limit theorems about sampling distributions.

I may write a mathematical note about it sometime. If you want a nice blog post you could try asking Andrew Gelman.

I have not only “tried actually reading” I have ACTUALLY read it. That was the difference between having anything on it in my “rejected” posts vs regular blog. Gelman has his own blog, and besides, I wanted the material from you, never mind.

Now I did check Zhang (2009) and Bickel (2011). The authors were a different Zhang and Bickel from the ones I expected. I will try to write up what I find, first on PubPeer, which I think is the right place to discuss the technical issues: https://pubpeer.com/publications/5439C6BFF5744F6F47A2E0E9456703

My initial impression is that these two works are conceptually flawed.

http://arxiv.org/abs/1506.07447 Fraud detection with statistics: A comment on “Evidential Value in ANOVA-Regression Results in Scientific Integrity Studies” (Klaassen, 2015) by Hannes Matuschek

Abstract: Klaassen in (Klaassen 2015) proposed a method for the detection of data manipulation given the means and standard deviations for the cells of a oneway ANOVA design. This comment critically reviews this method. In addition, inspired by this analysis, an alternative approach to test sample correlations over several experiments is derived. The results are in close agreement with the initial analysis reported by an anonymous whistleblower. Importantly, the statistic requires several similar experiments; a test for correlations between 3 sample means based on a single experiment must be considered as unreliable.

Click to access barnard1967.pdf