You are no longer bound to traditional clinical trials: 21st Century Cures!

I was just sent this in my email. Since I know nothing about it and am unable to comment, it’s here in my Rejected Posts alternative blog. I’m keen to hear what others, familiar with it, think of this 21st Century Cures Act. (It reminds me of the Doors, 20th Century Fox). Actually, I think this was passed in 2016, so it’s not big news. Of course the push for “personalized medicine” is part of this, never mind that it hasn’t had much success yet. I would have thought observational studies were at least admissible before. Do they require adjustments for data-dredging? Or not? I’m glad they will mention “pitfalls”. Now I’m going to have to ask my doctor which type of study a drug has had its approval based upon.

Thanks to the 21st Century Cures Act you are no longer bound to traditional clinical trials to prove safety and efficacy — new and exciting alternatives are available to you!

Observational studies are now acceptable in the FDA’s approval process. These studies allow the collection of real-world usage information from patients and physicians. And they allow for the harvesting of data from existing studies — from university research to patient data registries — to use as evidence of a product’s efficacy and safety (my emphasis).

Observational research requires an entirely different set of procedures and careful planning to ensure the real-world evidence collected is valid and reliable.

The 21st Century Take on Observational Studies walks you through everything you need to know about the opportunities and pitfalls observational studies can offer. The report looks at the growing trend toward observational research and how provisions in the 21st Century Cures Act create even more incentives to rely on real-world evidence in the development of medical products. The report covers:

- Provisions of the 21st Century Cures Act related to observational studies and gathering of real-world evidence

- The evolution of patient-focused research

- How observational studies can be used in the preapproval and postmarket stages

- The potential for saving time and money

- New data sources that make observational studies a viable alternative to clinical trials

- How drug- and devicemakers view observational research and how they are using it

Order your copy of The 21st Century Take on Observational Studies: Using Real-World Evidence in the New Millennium and learn effective uses of observational studies in both the preapproval and postmarket phases; how to identify stakeholders and determine what kind of data they needed, and how the FDA’s view on observational research is evolving.

Msc Kvetch: “Stat Fact?”: Scientists do and do not want subjective probability reports

Facts are true claims, in contrast with mere opinions, or normative claims. John Cook supplies this “stat fact”: scientists do and do not want subjective posterior probabilities. Which is it? And are these descriptions of the different methods widely accepted “facts”? I’ve placed this in my “rejected posts” (under “msc kvetching”) simply because I don’t take this seriously enough to place on my regular blog.

Putting the methods you use into context

It may come as a surprise, but the way you were probably taught statistics during your undergraduate years is not the way statistics is done. …It might be disconcerting to learn that there is no consensus amongst statisticians about what a probability is for example (a subjective degree of belief, or an objective long-run frequency?). …..

Bayesian

Bayesian methods are arguably the oldest; the Rev. Thomas Bayes published his theorem (posthumously) in 1764. …The controversial part of Bayesian methodology is the prior information (which is expressed as a distribution and often just referred to as “a prior”), because two people may have different prior knowledge or information and thus end up with a different result. In other words, individual knowledge (or subjective belief) can be combined with experimental data to produce the final result. Probabilities are therefore viewed as personal beliefs, which naturally differ between individuals. This doesn’t sit well with many scientists because they want the data “to speak for themselves”, and the data should give only one answer, not as many answers as there are people analysing it! It should be noted that there are also methods where the prior information is taken from the data itself, and are referred to as empirical Bayesian methods, which do not have the problem of subjectivity. ….The advantage of Bayesian methods is that they can include other relevant information and are therefore useful for integrating the results of many experiments. In addition, the results of a Bayesian analysis are usually what scientists want, in terms of what p-values and confidence intervals represent.

Note that the references include only critics of standard statistical methods; not even Cox is included. Stat fact: this is not a statistically representative list?

Souvenirs from “the improbability of statistically significant results being produced by chance alone”-under construction

I extracted some illuminating gems from the recent discussion on my “Error Statistics Philosophy” blogpost, but I don’t have time to write them up, and won’t for a bit, so I’m parking a list of comments wherein the golden extracts lie here; it may be hard to extricate them from over 120 comments later on. (They’re all my comments, but as influenced by readers.) If you do happen to wander into my Rejected Posts blog again, you can expect various unannounced tinkering on this post, and the results may not be altogether grammatical or error free. Don’t say I didn’t warn you.

I’m looking to explain how a frequentist error statistician (and lots of scientists) understand

Pr(Test T produces d(X)>d(x); Ho) ≤ p.

You say “the probability that the data were produced by random chance alone” is tantamount to assigning a posterior probability to Ho (based on a prior), and I say it is intended to refer to an ordinary error probability. The reason it matters isn’t because 2(b) is an ideal way to phrase the type 1 error prob or the attained significance level. I admit it isn’t ideal. But the supposition that it’s a posterior leaves one in the very difficult position of defending murky distinctions, as you’ll see in my next thumb’s up and down comment.

You see, for an error statistician, the probability of a test result is virtually always construed in terms of the HYPOTHETICAL frequency with which such results WOULD occur, computed UNDER the assumption of one or another hypothesized claim about the data generation. These are 3 key words.

Any result is viewed as of a general type, if it is to have any non-trivial probability for a frequentist.

Aside from the importance of the words HYPOTHETICAL and WOULD is the word UNDER.

Computing {d(X) > d(x)} UNDER a hypothesis, here, Ho, is not a conditional probability.** This may not matter very much, but I do think it makes it difficult for some to grasp the correct meaning of the intended error probability.

OK, well try your hand at my next little quiz.

…..

**See “Double misunderstandings about p-values”: https://normaldeviate.wordpress.com/2013/03/14/double-misunderstandings-about-p-values/

———————————————-

Thumbs up or down? Assume the p-value of relevance is 1 in 3 million or 1 in 3.5 million. (Hint: there are 2 previous comments of mine in this post of relevance.)

- only one experiment in three million would see an apparent signal this strong in a universe [where Ho is adequate].

- the likelihood that their signal was a result of a chance fluctuation was less than one chance in 3.5 million

- The probability of the background alone fluctuating up by this amount or more is about one in three million.

- there is only a 1 in 3.5 million chance the signal isn’t real.

- the likelihood that their signal would result by a chance fluctuation was less than one chance in 3.5 million

- one in 3.5 million is the likelihood of finding a false positive—a fluke produced by random statistical fluctuation

- there’s about a one-in-3.5 million chance that the signal they see would appear if there were [Ho adequate].

- it is 99.99997 per cent likely to be genuine rather than a fluke.

They use likelihood when they should mean probability, but we let that go.

The answers will reflect the views of the highly respected PVPs–P-value police.

—————————————————

THUMBS UP OR DOWN ACCORDING TO THE P-VALUE POLICE (PVP)

1. only one experiment in three million would see an apparent signal this strong in a universe [where Ho adequately describes the process].

up

2. the likelihood that their signal was a result of a chance fluctuation was less than one chance in 3.5 million

down

3. The probability of the background alone fluctuating up by this amount or more is about one in three million.

up

4. there is only a 1 in 3.5 million chance the signal isn’t real.

down

5. the likelihood that their signal would result by a chance fluctuation was less than one chance in 3.5 million

up

6. one in 3.5 million is the likelihood of finding a false positive—a fluke produced by random statistical fluctuation

down (or at least “not so good”)

7. there’s about a one-in-3.5 million chance that the signal they see would appear if there were no genuine effect [Ho adequate].

up

8. it is 99.99997 per cent likely to be genuine rather than a fluke.

down

I find #3 as a thumbs up especially interesting.

The real lesson, as I see it, is that even the thumbs up statements are not quite complete in themselves, in the sense that they need to go hand in hand with the INFERENCES I listed in an earlier comment, and repeat below. These incomplete statements are error probability statements, and they serve to justify or qualify the inferences which are not probability assignments.

In each case, there’s an implicit principle (severity) which leads to inferences which can be couched in various ways such as:

Thus, the results (i.e., the ability to generate d(X) > d(x)) indicate(s):

- the observed signals are not merely “apparent” but are genuine.

- the observed excess of events is not due to background

- “their signal” wasn’t (due to) a chance fluctuation.

- “the signal they see” wasn’t the result of a process as described by Ho.

If you’re a probabilist (as I use that term), and assume that statistical inference must take the form of a posterior probability*, then unless you’re meticulous about the “was/would” distinction you may fall into the erroneous complement that Richard Morey aptly describes. So I agree with what he says about the concerns. But the error statistical inferences are 1,3,5,7 along with the corresponding error statistical qualification.

For this issue, please put aside the special considerations involved in the Higgs case. Also put to one side, for this exercise at least, the approximations of the models. If we’re trying to make sense out of the actual work statistical tools can perform, and the actual reasoning that’s operative and why, we are already allowing the rough and ready nature of scientific inference. It wouldn’t be interesting to block understanding of what may be learned from rough and ready tools by noting their approximative nature–as important as that is.

*I also include likelihoodists under “probabilists”.

****************************************************

Richard and everyone: The thumb’s up/downs weren’t mine!!! They are Spiegelhalter’s!

http://understandinguncertainty.org/explaining-5-sigma-higgs-how-well-did-they-do

I am not saying I agree with them! I wouldn’t rule #6 thumbs down, but he does. This was an exercise in deconstructing his and similar appraisals, (which are behind principle #2) in order to bring out the problem that may be found with 2(b). I can live with all of them except #8.

Please see what I say about “murky distinctions” in the comment from earlier:

http://errorstatistics.com/2016/03/12/a-small-p-value-indicates-its-improbable-that-the-results-are-due-to-chance-alone-fallacious-or-not-more-on-the-asa-p-value-doc/#comment-139716

****************************************

PVP’s explanation of official ruling on #6

****************************************

The insights to take away from this thumb’s up:

3. The probability of the background alone fluctuating up by this amount or more is about one in three million.

Given that the PVP are touchy about assigning probabilities to “the explanation” it is noteworthy that this is doing just that. Isn’t it?*

Abstract away as much as possible from the particularities of the Higgs case, which involves a “background,” in order to get at the issue.

3′ The probability that chance variability alone (or perhaps the random assignment of treatments) produces a difference as large as or larger than this is about one in 3 million. (The numbers don’t matter.)

In the case where p is very small, the “or larger” doesn’t really add any probability. The “or larger” is needed for BLOCKING inferences to real effects by producing p-values that are not small. But we can keep it in.

3″ The probability that chance alone produces a difference as large as or larger than observed is 1 in 3 million (or other very small value).

3‴ The probability that a difference this large or larger is produced by chance alone is 1 in 3 million (or other very small value).

I see no difference between 3, 3′, 3″ and 3‴. (The PVP seem forced into murky distinctions.)

For a frequentist who follows Fisher in avoiding isolated significant results, the “results” = the ability to produce such statistically significant results.

*Qualification: It’s never what the PVP called “explanation” alone, nor the data alone, at least for a sampling theorist-error statistician. It’s the overall test procedure, or even better: “my ability to reliably bring about results that are very improbable under Ho”. I render it easy to bring about results that would be very difficult under Ho.

See also my comment below:

The mistake is in thinking we start with the probabilistic question Richard states. I say we don’t. I don’t.

*********************************************

Here it is:

Richard: I want to come back to your first comment:

You wrote:

if I ask “What is the probability that the symptoms are due to heart disease?” I’m asking a straightforward question about whether the probability that the symptoms are caused by an actual case of heart disease, not the probability that I would see the symptoms assuming I had heart disease.

My reply: Stop right after the first comma, before “not the probability.” The real question of interest is: are these symptoms caused by heart disease (not the probability they are).

In setting out to answer the question suppose you found that it’s quite common to have even worse symptoms due to indigestion and no heart disease. This indicates it’s readily explainable without invoking heart disease. That is, in setting out to answer a non-probabilistic question* you frame it as of a general type, and start asking how often would this kind of thing be expected under various scenarios. You appeal to probabilistic considerations to answer your non-probabilistic question, and when you amass enough info, you answer it. Non-probabilistically.

Abstract from the specifics of the example on heart disease which brings in many other considerations.

*You know the answers are open to error, but that doesn’t make the actual question probabilistic.

*********************************

Mar 20, 2016: Look at #1:

- only one experiment in three million would see an apparent signal this strong in a universe [where Ho is adequate/true].

a. This is a big thumb’s up for the PVP. Now I do have one beef with this, and that’s the fact it doesn’t say that the apparent signal is produced by, or because of, or due to, whatever mechanism is described by Ho. This is important, because unless this production connection is there, it’s not an actual p-value. (I’m thinking of that Kadane chestnut on my regular blog where some “result” (say a tsunami) is very improbable, and it’s alleged one can put any “null” hypothesis Ho at all to the right of “;” (e.g., no Higgs particle), and get a low p-value for Ho.)

The p-value has to be computable because of Ho, that is, Ho assigns the probability to the results.

b. Now consider: “an apparent signal this strong in a universe where Ho is the case”. The blue words are what the particle physicist means by a “fluke” of that strength. So we get a thumb’s up to:

only one experiment in three million would see a fluke this strong (i.e., under Ho)

or

the probability of seeing a 5 sigma fluke is one in three million.
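As a rough check on where figures like “one in 3.5 million” come from, here is a minimal sketch. It assumes the 5 sigma threshold is read as a one-sided tail area of a standard normal distribution; the function name `upper_tail` is made up for illustration and is not from the physics reports.

```python
import math

def upper_tail(z):
    """Pr(Z >= z) for a standard normal Z, using the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2))

# Assumption: "5 sigma" means a one-sided cutoff of z = 5 under Ho.
p_five_sigma = upper_tail(5.0)
one_in = 1.0 / p_five_sigma

print(f"tail area under Ho: {p_five_sigma:.3e}")
print(f"roughly 1 in {one_in:,.0f}")
```

Under that assumption the tail area comes out at a little under 3 in 10 million, or roughly 1 in 3.5 million; “one in three million” appears to be a looser rounding of the same number. Note that this is Pr(d(X) > d(x); Ho), an error probability computed under Ho, not a probability assigned to Ho.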

Laurie: I get your drift, and now I see that it arose because of some very central confusions between how different authors of comments on the ASA doc construed the model. I don’t want to relegate that to a mere problem of nomenclature or convention, but nevertheless, I’d like to take it up another time and return to my vacuous claim.

The Pr(P < p; Ho) = p (very small). Or

(1): Pr(Test T yields d(X) > d(x); Ho) = p (very small) This may be warranted by simulation or analytically, so not mere mathematics, and it’s always approximate, but I’m prepared to grant this.
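To make “warranted by simulation or analytically” concrete, here is a small sketch of claim (1). Everything specific in it is an assumption chosen for illustration: Ho says the observations are independent N(0, 1), the test statistic is d(X) = √n · (sample mean), and the observed value d(x) = 2.0 is a modest cutoff picked so the simulation runs quickly (a 5 sigma cutoff would need far more replications).

```python
import math
import random

def d_stat(sample):
    """Standardized sample mean: d(X) = sqrt(n) * mean, assuming sigma = 1 under Ho."""
    n = len(sample)
    return math.sqrt(n) * (sum(sample) / n)

def simulated_p_value(d_obs, n=25, reps=20000, seed=1):
    """Relative frequency of d(X) > d_obs in hypothetical repetitions generated UNDER Ho."""
    rng = random.Random(seed)
    exceed = 0
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # data as Ho says they arise
        if d_stat(sample) > d_obs:
            exceed += 1
    return exceed / reps

def analytic_p_value(d_obs):
    """Pr(d(X) > d_obs; Ho) from the standard normal upper tail."""
    return 0.5 * math.erfc(d_obs / math.sqrt(2))

d_obs = 2.0  # hypothetical observed value of the test statistic
print("simulated:", simulated_p_value(d_obs))
print("analytic: ", analytic_p_value(d_obs))
```

The simulation never conditions on Ho being an event with a probability; it just generates the hypothetical results that WOULD occur UNDER Ho and counts how often they exceed the observed one, which is the reading of the “;” in (1).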

Or, to allude to Fisher:

The probability of bringing about such statistically significant results “at will”, were Ho the case, is extremely small.

Now for the empirical and non-vacuous part (which we are to spoze is shown):

Spoze

(2): I do reliably bring about stat sig results d(X) > d(x). I’ve shown the capability of bringing about results each of which would occur in, say, 1 in 3 million experiments UNDER the supposition that Ho.

(It’s not even the infrequency that matters, but the use of distinct instruments with different assumptions, where errors in one are known to ramify in at least one other.)

I make the inductive inference (which again, can be put in various terms, but pick any one you like):

(3): There’s evidence of a genuine effect.

The move from (1) and (2) to inferring (3) is based on

(4): Claim (3) has passed a stringent or severe test by dint of (1) and (2). In informal cases, the strongest ones, actually, this is also called a strong argument from coincidence.

(4) is full of content and not at all vacuous, as is (2). I admit it’s “philosophical” but also justifiable on empirical grounds. But I won’t get into those now.

These aren’t all in chronological order, but rather in (somewhat) logical order. What’s the upshot? I’ll come back to this. Feel free to comment.

Can today’s nasal spray influence yesterday’s sex practices? Non-replication isn’t nearly critical enough: Rejected post

Sit down with students or friends and ask them what’s wrong with this study–or just ask yourself–and it will likely leap out. Now I’ve only read the paper quickly, and know nothing of oxytocin (OT) research. That’s why this is in the “Rejected Posts” blog. Plus, I’m writing this really quickly.

You see, I noticed a tweet about how non-statistically significant results are often stored in filedrawers, rarely published, and right away was prepared to applaud the authors for writing on their negative result. Now that I see the nature of the study, and the absence of any critique of the experiment itself (let alone the statistics), I am less impressed. What amazes me about so many studies is not that they fail to replicate but that the glaring flaws in the study aren’t considered! But I’m prepared to be corrected by those who do serious oxytocin research.

In a nutshell: Treateds get OT nasal spray, controls get a placebo spray; you’re told they’re looking for effects on sexual practices, when actually they’re looking for effects on the trust you bestow upon experimenters with your answers.

The instructions were the follows: “You will now perform a task on the computer. The instruction concerning this task will appear on screen but if you have any question, do not hesitate. At the end of the computer test, you will have to fill a questionnaire that is in the envelope on your desk. As we want to examine if oxytocin has an influence on sexual practices and fantasies, do not be surprised by the intimate or awkward nature of the questions. Please answer as honestly as possible. You will not be judged. Also do not be afraid of being sincere in your answers, I will not look at your questionnaire, I swear it. It will be handled by one of the guy in charge of the optical reading device who will not be able to identify you (thanks to the coding system). At the end of the experiment, I will bring him all the questionnaires. I will just ask you to put the questionnaire back in the envelope once it is completed. You may close the envelope at the end and, if you want, you may even add tape. There is a tape dispenser on your desk”. There is some examples of questions they were asked to answer: “What was your wildest sex experiment ?”, “Are you satisfied with your sex life? Could you describe it? (frequency, quality,…)” Please report on a 7-point Likert scale (1 = not at all, it disgusts me à 7 = very much, I really like) your willingness to be involved in the following sexual practices: using sex toys, doing a threesome, having sex in public, watch other people having sex, watch porn before or during a sexual intercourse,…”

Imagine you’re a subject in the study. Is there a reason to care if the researcher knows details of your sex life? The presumption is that you do care. But anyone who really cared wouldn’t reveal whatever they deemed so embarrassing. But wait, there’s another crucial element to this experiment.

We’re told: “we want to examine if oxytocin has an influence on sexual practices and fantasies“. You’ve been sprayed with either OT or placebo, and I assume you don’t know which. Suppose OT does influence willingness to engage in wild sex experiments. Being sprayed today couldn’t very well change your previous behavior. So unless they had asked you last week (without spray) and now once again with spray, they can’t be looking for changes on actual practice. But OT spray could make you more willing to say you’re more willing to engage in “the following sexual practices: using sex toys, doing a threesome, having sex in public,…etc. etc.” It could also influence feelings right now, i.e., how satisfied you feel now that you’ve been “treated”. So since the subject reasons this must be the effect they have in mind, only scores on the “willingness” and “current feelings” questions could be picking up on the OT effect. But high numbers on willingness and feelings questions don’t reflect actual behaviors–unless the OT effect extends to exaggerating about past behaviors, that is, lying about them, in which case, once again, your own actual choices and behaviors in life are not revealed by the questionnaire. Given the tendency of subjects to answer as they suppose the researcher wants, I can imagine higher numbers on such questions (than if they weren’t told they’re examining if OT has an influence on sexual practices). But since the numbers don’t, indeed, can’t reflect true effects on sexual behavior, there’s scarce reason to regard them as private information revealed only to experimenters you trust. I’ll bet almost no one uses the tape*.

There are many alternative criticisms of this study. For example, realizing they can’t be studying the influence on sex practices, you already mistrust the experimenter. Share yours.

Let me be clear: I don’t say OT isn’t related to love and trust––it’s active in childbirth, nursing, and, well…whatever. It is, after all, called the ‘love hormone’. My kvetch is with the capability of this study to discern the intended effect.

I say we need to publish analyses showing what’s wrong with the assumption that a given experiment is capable of distinguishing the “effects” of the “treatment” of interest. And what about those Likert scales! These aren’t exactly genuine measurements merely because they’re quantitative.

*It would be funny to look for a correlation between racy answers and tape.

A non-statistically significant difference strengthens or confirms a null, says Fisher (1955)

In Fisher (1955) [from “the triad”]: “it is a fallacy, so well known as to be a standard example, to conclude from a test of significance that the null hypothesis is thereby established; at most it may be said to be confirmed or strengthened.”

I just noticed the last part of this sentence, which I think I’ve missed in a zillion readings, or else it didn’t seem very important. People erroneously think Fisherian tests can infer nothing from non-significant results, but I hadn’t remembered that Fisher himself made it blatant–even while he is busy yelling at N-P for introducing the Type 2 error! Neyman and Pearson use power-analytic reasoning to determine how well the null is “confirmed”. If POW(μ’) is high, then a non-statistically significant result indicates μ≤ μ’.
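The power-analytic reasoning can be sketched numerically. The setup below is an assumption for illustration, not something taken from Fisher or N-P: a one-sided test of Ho: μ ≤ μ0 against μ > μ0 with known σ, rejecting when √n(x̄ − μ0)/σ exceeds the α cutoff. The function name `power` and the default values are likewise hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal

def power(mu_alt, mu0=0.0, sigma=1.0, n=100, alpha=0.025):
    """POW(mu_alt): probability the test rejects Ho when the true mean is mu_alt."""
    cutoff = Z.inv_cdf(1 - alpha)                      # rejection threshold for d(X)
    shift = math.sqrt(n) * (mu_alt - mu0) / sigma      # mean of d(X) under mu_alt
    return 1 - Z.cdf(cutoff - shift)

for mu_alt in (0.0, 0.1, 0.2, 0.4):
    print(f"POW({mu_alt}) = {power(mu_alt):.3f}")
```

With these made-up numbers, POW(0.4) is close to 0.98, so a non-significant result indicates μ ≤ 0.4: had μ been as large as 0.4, the test would very probably have rejected. POW(0.1) is under 0.2, so the same non-significant result is poor grounds for inferring μ ≤ 0.1.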

On what “evidence-Based Bayesians” like Gelman really mean: rejected post

How to interpret subjective Bayesians who want to be hard-nosed Bayesians is often like swimming round and round in a funnel of currents where there’s nothing to hold on to. Well, I think I’ve recently stopped the flow and pegged it. Christian Hennig and I have often discussed this (on my regular blog), and something Gelman posted today linked me to an earlier exchange between him and Christian.

Christian: I came across an exchange between you and Andrew because it was linked to by Andrew on a current blog post

It really brings out the confusion I have had, we both have had, and which I am writing about right now (in my book), as to what people like Gelman mean when they talk about posterior probabilities. First:

a posterior of .9 to

H: “θ is positive”

is identified with giving 9 to 1 odds on H.

Gelman had said: “it seems absurd to assign a 90% belief to the conclusion. I am not prepared to offer 9 to 1 odds on the basis of a pattern someone happened to see that could plausibly have occurred by chance”

Then Christian says, this would be to suggest “I don’t believe it” means “it doesn’t agree with my subjective probability” and Christian doubts Andrew could mean that. But I say he does mean that. His posterior probability is his subjective (however evidence-based) probability.

Next the question is, what’s the probability assigned to? I think it is assigned to H:θ > 0

As for the meaning of “this event would occur 90% of the time in the long run under repeated trials” I’m guessing that “this event” is also H. The repeated “trials” allude to a repeated θ generating mechanism, or over different systems each with a θ. The outputs would be claims of form H (or not-H or different assertions about the θ for the case at hand ), and he’s saying 90% of the time the outputs would be H, or H would be the case. The outputs are not ordinary test results, but states of affairs, namely θ > 0.

Bottom line: It seems to me that all Bayesians who assign posteriors to parameters (aside from empirical Bayesians) really mean the kind of odds statement that you and I and most people associate with partial belief or subjective probability. “Epistemic probability” would do as well, but it is equivocal. It doesn’t matter how terrifically objectively warranted that subjective probability assignment is, we’re talking meaning. And when one finally realizes this is what they meant all along, everything they say is less baffling. What do you think?

——————————————–

Background

Andrew Gelman:

First off, a claimed 90% probability that θ>0 seems too strong. Given that the p-value (adjusted for multiple comparisons) was only 0.2—that is, a result that strong would occur a full 20% of the time just by chance alone, even with no true difference—it seems absurd to assign a 90% belief to the conclusion. I am not prepared to offer 9 to 1 odds on the basis of a pattern someone happened to see that could plausibly have occurred by chance,

Christian Hennig says:

“Then the data under discussion (with a two-sided p-value of 0.2), combined with a uniform prior on θ, yields a 90% posterior probability that θ is positive. Do I believe this? No.”

What exactly would it mean to “believe” this? Are you referring to a “true unknown” posterior probability with which you compare the computed one? How would the “true” one be defined?

Later there’s this:

“I am not prepared to offer 9 to 1 odds on the basis of a pattern someone happened to see that could plausibly have occurred by chance, …”

…which kind of suggests that “I don’t believe it” means “it doesn’t agree with my subjective probability” – but knowing you a bit I’m pretty sure that’s not what you meant before. But what is it then?
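The 90% figure in the exchange above can be reproduced with a short calculation. This is only a sketch under assumptions not spelled out in the quoted text: the estimate of θ is taken to be normally distributed around θ with a known standard error, the observed estimate is positive, and the prior on θ is flat, so the posterior for θ is normal and centred on the estimate. The function name is hypothetical.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal

def posterior_prob_positive(two_sided_p):
    """Pr(theta > 0 | data) under a flat prior, given a two-sided p-value
    from a normal test with a positive observed estimate."""
    z_obs = Z.inv_cdf(1 - two_sided_p / 2)  # observed estimate in standard-error units
    return Z.cdf(z_obs)                     # posterior is N(estimate, se^2), so this is Pr(theta > 0)

prob = posterior_prob_positive(0.2)
odds = prob / (1 - prob)
print(f"posterior Pr(theta > 0) = {prob:.3f}, i.e. odds of about {odds:.1f} to 1")
```

Under these assumptions the posterior probability is simply 1 − p/2, which is 0.9 when the two-sided p-value is 0.2, matching the “9 to 1 odds” that Gelman declines to offer.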

Fraudulent until proved innocent: Is this really the new “Bayesian Forensics”? (ii) (rejected post)

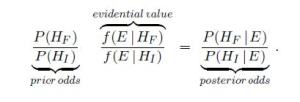

I saw some tweets last night alluding to a technique for Bayesian forensics, on the basis of which published papers are to be retracted. So far as I can tell, your paper is guilty of being fraudulent so long as the/a prior Bayesian belief in its fraudulence is higher than in its innocence. Klaassen (2015):

“An important principle in criminal court cases is ‘in dubio pro reo’, which means that in case of doubt the accused is favored. In science one might argue that the leading principle should be ‘in dubio pro scientia’, which should mean that in case of doubt a publication should be withdrawn. Within the framework of this paper this would imply that if the posterior odds in favor of hypothesis HF of fabrication equal at least 1, then the conclusion should be that HF is true.” Now the definition of “evidential value” (supposedly, the likelihood ratio of fraud to innocent), called V, must be at least 1. So it follows that any paper for which the prior for fraudulence exceeds that of innocence, “should be rejected and disqualified scientifically. Keeping this in mind one wonders what a reasonable choice of the prior odds would be.”(Klaassen 2015)

Yes, one really does wonder!

“V ≥ 1. Consequently, within this framework there does not exist exculpatory evidence. This is reasonable since bad science cannot be compensated by very good science. It should be very good anyway.”

What? I thought the point of the computation was to determine if there is evidence for bad science. So unless it is a good measure of evidence for bad science, this remark makes no sense. Yet even the best case can be regarded as bad science simply because the prior odds in favor of fraud exceed 1. And there’s no guarantee this prior odds ratio is a reflection of the evidence, especially since if it had to be evidence-based, there would be no reason for it at all. (They admit the computation cannot distinguish between QRPs and fraud, by the way.) Since this post is not yet in shape for my regular blog, but I wanted to write down something, it’s here in my “rejected posts” site for now.
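The arithmetic behind this complaint can be laid out in a few lines. The sketch assumes the usual odds form of Bayes’ theorem, posterior odds = prior odds × V, with V playing the role of the likelihood ratio of fabrication to innocence and constrained to be at least 1 as in the quoted passage. The function names are made up for illustration and are not Klaassen’s.

```python
def posterior_odds(prior_odds, v):
    """Posterior odds of fabrication (HF) given prior odds and evidential value V >= 1."""
    if v < 1:
        raise ValueError("within the quoted framework V is at least 1")
    return prior_odds * v

def concludes_fabrication(prior_odds, v):
    """Quoted decision rule: conclude HF when the posterior odds are at least 1."""
    return posterior_odds(prior_odds, v) >= 1

# If the prior odds already favour fabrication, no value of V can rescue the paper.
for v in (1.0, 2.0, 50.0):
    print("prior odds 1.0, V =", v, "->", concludes_fabrication(1.0, v))

# With prior odds of 0.5, the verdict turns on whether V reaches 2.
print("prior odds 0.5, V = 1.5 ->", concludes_fabrication(0.5, 1.5))
print("prior odds 0.5, V = 2.0 ->", concludes_fabrication(0.5, 2.0))
```

Since V can only push the odds up, the data can never lower them, which is the sense in which there is no exculpatory evidence and the verdict can rest on the prior alone.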

Added June 9: I realize this is being applied to the problematic case of Jens Forster, but the method should stand or fall on its own. I thought rather strong grounds for concluding manipulation were already given in the Forster case. (See Forster on my regular blog). Since that analysis could (presumably) distinguish fraud from QRPs, it was more informative than the best this method can do. Thus, the question arises as to why this additional and much shakier method is introduced. (By the way, Forster admitted to QRPs, as normally defined.) Perhaps it’s in order to call for a retraction of other papers that did not admit of the earlier, Fisherian criticisms. It may be little more than formally dressing up the suspicion we’d have in any papers by an author who has retracted one(?) in a similar area. The danger is that it will live a life of its own as a tool to be used more generally. Further, just because someone can treat a statistic “frequentistly” doesn’t place the analysis within any sanctioned frequentist or error statistical home. Including the priors, and even the non-exhaustive, (apparently) data-dependent hypotheses, takes it out of frequentist hypothesis testing. Additionally, this is being used as a decision making tool to “announce untrustworthiness” or “call for retractions”, not merely to analyze warranted evidence.

Klaassen, C. A. J. (2015). Evidential value in ANOVA-regression results in scientific integrity studies. arXiv:1405.4540v2 [stat.ME]. Discussion of the Klaassen method on pubpeer review: https://pubpeer.com/publications/5439C6BFF5744F6F47A2E0E9456703

Msc Kvetch: Why isn’t the excuse for male cheating open to women?

In an op-ed in the NYT Sunday Review (May 24, 2015), “Infidelity Lurks in Your Genes,” Richard Friedman states that:

We have long known that men have a genetic, evolutionary impulse to cheat, because that increases the odds of having more of their offspring in the world.

But now there is intriguing new research showing that some women, too, are biologically inclined to wander, although not for clear evolutionary benefits.

I’ve never been sold on this evolutionary explanation for male cheating, but I wonder why it’s assumed women wouldn’t be entitled to it as well. For the male’s odds of having more offspring to increase, the woman has to have the baby, so why wouldn’t the woman also get the increased odds of more offspring? It’s the woman’s offspring too. Moreover, the desire to have babies tends to be greater among women than men.